Example of multi-AZ environment configuration¶

On this page, we provide an example configuration that can be used in production environments with multiple Availability Zones.

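It is an extended and more specific version of the Routed environment example, so you are expected to be familiar with the concepts and approaches defined there.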

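To better understand why some configuration options were applied in the examples, we also recommend reviewing Configuring the inventory.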

General design¶

The following design decisions were made in the example below:

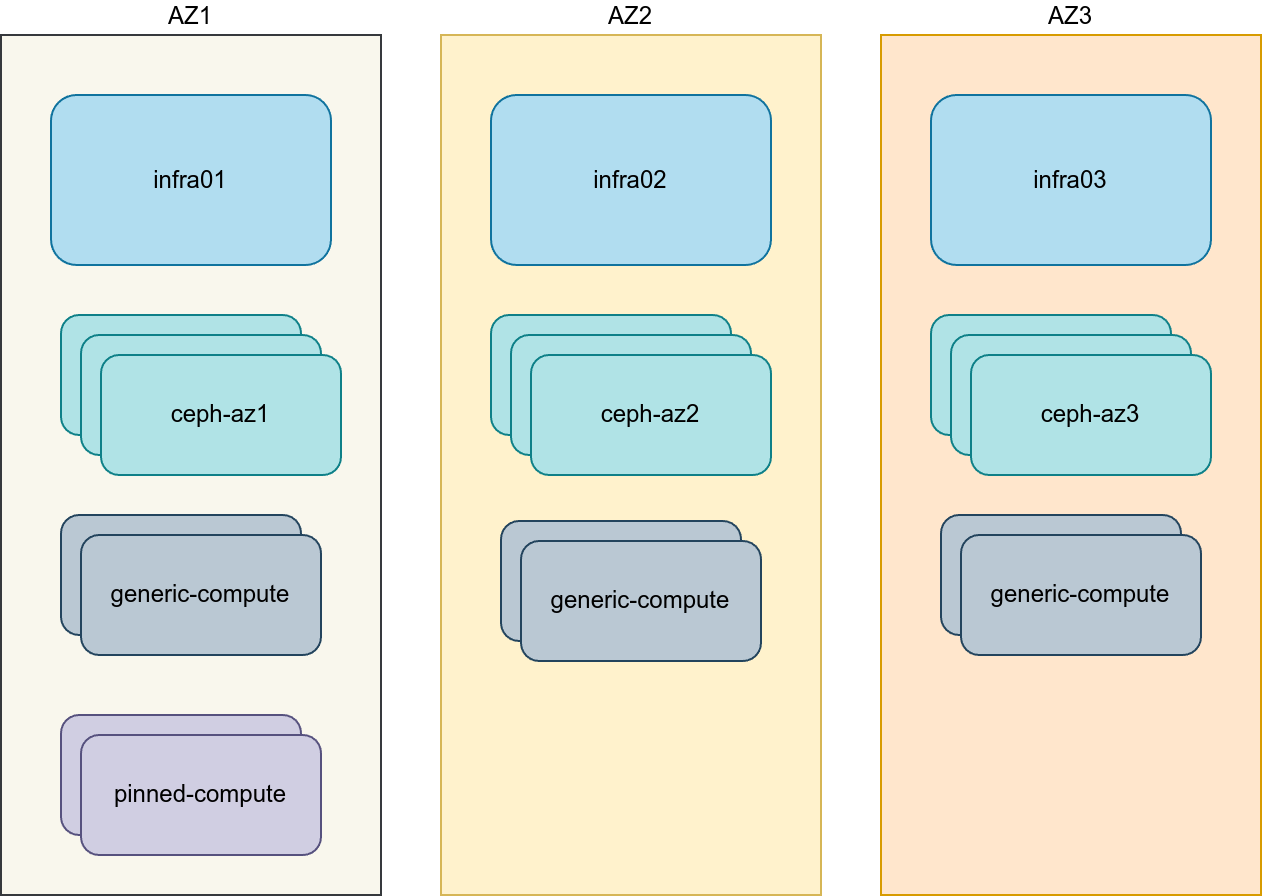

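Three Availability Zones (AZs)

Three infrastructure (control plane) hosts, each placed in a different Availability Zone

Eight compute hosts: two in each Availability Zone, plus two extra compute hosts in the first Availability Zone for a pinned CPU aggregate

Three Ceph storage clusters provisioned with Ceph Ansible

Compute hosts act as OVN gateway hosts

Tunnel networks that are reachable between Availability Zones

Public API, OpenStack external, and management networks are represented as stretched L2 networks between Availability Zones.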

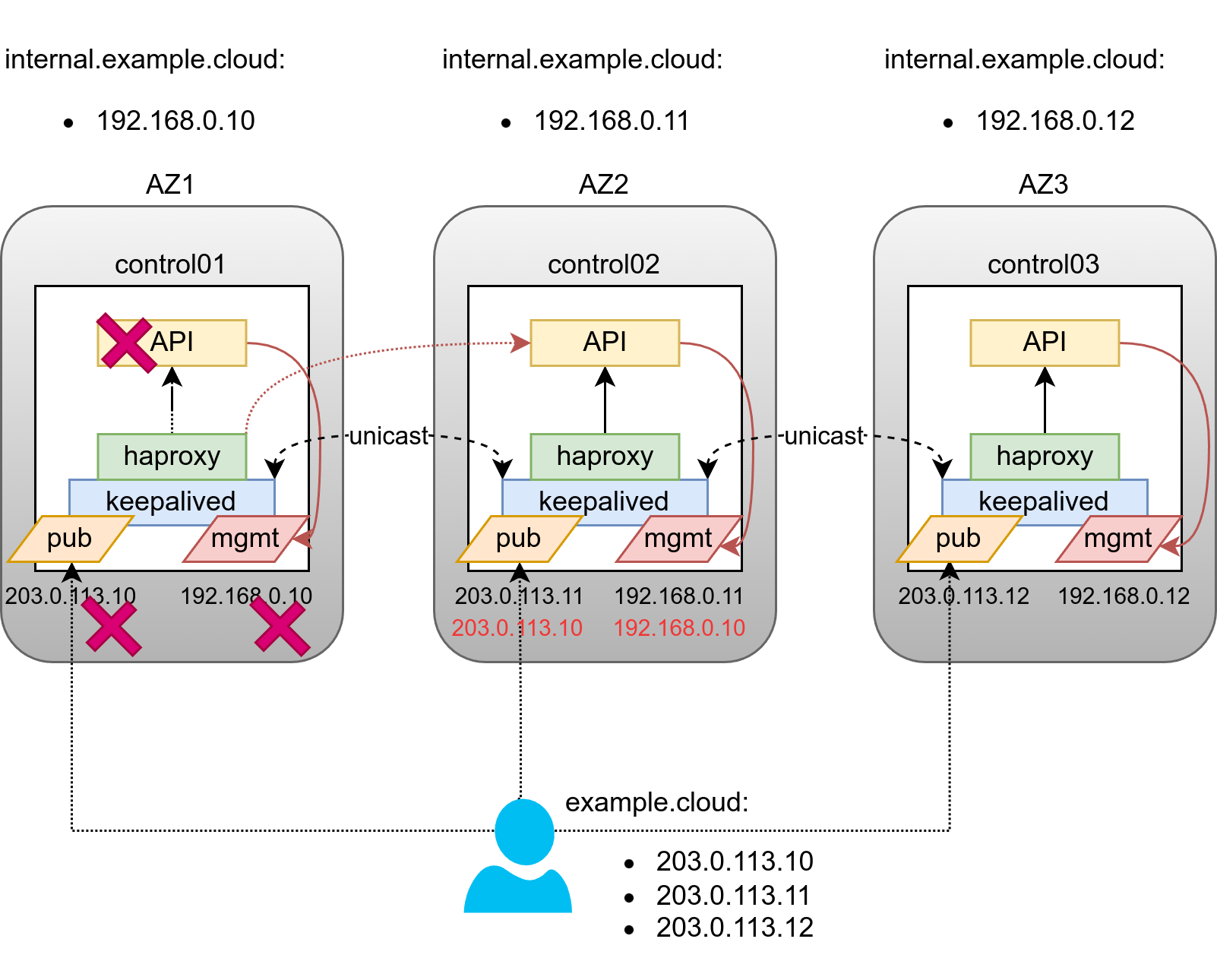

Load balancing¶

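A load balancer (HAProxy) is usually deployed on infrastructure hosts. Because the infrastructure hosts are spread across Availability Zones, we need a more complex design that solves the following issues:

Withstand the failure of a single Availability Zone

Reduce the amount of cross-AZ traffic

Spread the load across Availability Zones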

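To address these challenges, the following changes were made to the basic design:

Leverage DNS round robin (one A/AAAA record per AZ) for the public API

Define the internal API FQDN through /etc/hosts overrides that are unique per Availability Zone

Define six Keepalived instances: three for public virtual IP addresses (VIPs) and three for internal VIPs

Ensure that HAProxy prioritizes backends from its own Availability Zone over "remote" ones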
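The per-AZ /etc/hosts override can be illustrated with a short Python sketch. It models only the idea behind the openstack_host_custom_hosts_records override in user_variables.yml: every host resolves the same internal FQDN to the internal VIP of its own AZ. The VIP addresses come from the keepalived.yml example on this page. The FQDN list and the helper name are hypothetical, and this is not OpenStack-Ansible code.

```python
# Hypothetical sketch: point internal API FQDNs at the AZ-local internal VIP,
# producing /etc/hosts-style lines. Not OpenStack-Ansible code.

# Internal VIPs per AZ (values from the keepalived.yml example on this page).
INTERNAL_VIPS = {
    "az1": "172.29.236.21",
    "az2": "172.29.236.22",
    "az3": "172.29.236.23",
}

# Hypothetical list of internal service FQDNs.
INTERNAL_FQDNS = ["internal.example.cloud"]


def hosts_records(az_name, fqdns):
    """Return '<vip> <fqdn>' lines that resolve every FQDN to the VIP of az_name."""
    vip = INTERNAL_VIPS[az_name]
    return [f"{vip} {fqdn}" for fqdn in fqdns]


if __name__ == "__main__":
    for az in sorted(INTERNAL_VIPS):
        print(az, hosts_records(az, INTERNAL_FQDNS))
```

Each AZ gets a different address for the same name, so internal API traffic stays inside the AZ while that AZ's Keepalived instance holds its VIP. Keepalived can still move the VIP to another AZ's HAProxy if the local one fails.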

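The example also deploys HAProxy together with Keepalived in LXC containers, in contrast to the more conventional bare metal deployment. See HAProxy and Keepalived in LXC containers for more details on how to do that.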

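Storage design complications¶

There are several complications in organizing storage when the storage is not stretched between Availability Zones.

First, each Availability Zone has only a single controller, while every storage provider needs several copies of cinder_volume running to achieve high availability. Because cinder_volume needs access to the storage network, the ceph-mon hosts are one of the best places to run it.

Another challenge is organizing shared storage for Glance images, because rbd can no longer be used consistently across AZs. The Glance Interoperable Import interface could be used to sync images between rbd backends, but in practice not all clients and services can work with Glance's import API. The most obvious solution is to use the Swift API and configure a Ceph RadosGW policy that replicates the bucket between the independent instances located in each Availability Zone.

The last, but not the least, complication is Nova scheduling when cross_az_attach is disabled. When an instance is created directly from a volume, Nova does not add the Availability Zone to the instance request_specs. This differs from creating the volume manually in advance and passing its UUID to the instance create API call. The problem is that, during migration or rescheduling, Nova will try to move an instance without an Availability Zone in its request_specs to other AZs, which fails because cross_az_attach is disabled. You can read more about this in the Nova bug report.

To work around this bug, set default_schedule_zone for Nova and Cinder, which ensures that an AZ is always defined in request_specs. You can go further and set an actual Availability Zone as default_schedule_zone, so that each controller has its own default. The load balancer tries to send requests only to "local" backends, so this approach effectively distributes new VMs across all AZs when users do not explicitly specify an AZ.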
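The scheduling problem above can be made concrete with a deliberately simplified Python model. It is not Nova code. It assumes that the request spec stores the user-supplied AZ or, failing that, the configured default_schedule_zone. It also assumes that, with no AZ stored, any AZ is a possible target on migration or reschedule. The function names and the AZ list are hypothetical.

```python
# Hypothetical, simplified model of the boot-from-volume scheduling problem.
# Not Nova code: only availability zones are modelled.
AZS = ["az1", "az2", "az3"]


def request_spec_az(user_az, default_schedule_zone):
    """AZ recorded in the request spec: the user's choice, else the default (may be None)."""
    return user_az if user_az is not None else default_schedule_zone


def failing_move_targets(spec_az, volume_az, cross_az_attach=False):
    """AZs the scheduler may pick on migrate/reschedule where attaching the volume fails."""
    # Without an AZ in the request spec, every AZ is a candidate target.
    candidates = [spec_az] if spec_az is not None else AZS
    if cross_az_attach:
        return []  # attaching across AZs is allowed, so no target fails
    return [az for az in candidates if az != volume_az]


if __name__ == "__main__":
    print(failing_move_targets(request_spec_az(None, None), "az1"))   # no default set
    print(failing_move_targets(request_spec_az(None, "az1"), "az1"))  # default set
```

In this model, an instance booted from a volume in az1 without any default can be moved to az2 or az3, and both moves fail. Once default_schedule_zone points at az1, no failing target remains.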

Configuration examples¶

Network configuration¶

Network CIDR/VLAN assignments¶

The following CIDR assignments are used for this environment.

| Network | CIDR | VLAN |
|---|---|---|
| Management network | 172.29.236.0/22 | 10 |
| AZ1 storage network | 172.29.244.0/24 | 20 |
| AZ1 tunnel (Geneve) network | 172.29.240.0/24 | 30 |
| AZ2 storage network | 172.29.245.0/24 | 21 |
| AZ2 tunnel (Geneve) network | 172.29.241.0/24 | 31 |
| AZ3 storage network | 172.29.246.0/24 | 22 |
| AZ3 tunnel (Geneve) network | 172.29.242.0/24 | 32 |
| Public API VIPs | 203.0.113.0/28 | 400 |
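Before deploying, it is worth checking that no two CIDRs in a plan like this overlap and that no VLAN ID is reused. The Python sketch below does both with the standard ipaddress module. It is an illustrative helper, not part of OpenStack-Ansible, and it only knows about the networks listed in the table.

```python
import ipaddress
from itertools import combinations

# Network plan copied from the CIDR/VLAN table above: name -> (CIDR, VLAN ID).
PLAN = {
    "management": ("172.29.236.0/22", 10),
    "storage_az1": ("172.29.244.0/24", 20),
    "tunnel_az1": ("172.29.240.0/24", 30),
    "storage_az2": ("172.29.245.0/24", 21),
    "tunnel_az2": ("172.29.241.0/24", 31),
    "storage_az3": ("172.29.246.0/24", 22),
    "tunnel_az3": ("172.29.242.0/24", 32),
    "public_api_vip": ("203.0.113.0/28", 400),
}


def overlapping_pairs(plan):
    """Return every pair of network names whose CIDRs overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, (cidr, _vlan) in plan.items()}
    return [(a, b) for a, b in combinations(sorted(nets), 2) if nets[a].overlaps(nets[b])]


def duplicate_vlans(plan):
    """Return (first_name, second_name) pairs that reuse the same VLAN ID."""
    seen = {}
    duplicates = []
    for name, (_cidr, vlan) in plan.items():
        if vlan in seen:
            duplicates.append((seen[vlan], name))
        else:
            seen[vlan] = name
    return duplicates


if __name__ == "__main__":
    print("overlaps:", overlapping_pairs(PLAN))
    print("duplicate VLANs:", duplicate_vlans(PLAN))
```

The management network is a /22, so it spans 172.29.236.0 to 172.29.239.255. That range covers the per-AZ management addresses (172.29.237.x, 172.29.238.x, 172.29.239.x) used in the IP assignments.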

IP assignments¶

The following host name and IP address assignments are used for this environment.

| Host name | Management IP | Tunnel (Geneve) IP | Storage IP |
|---|---|---|---|
| infra1 | 172.29.236.11 | | |
| infra2 | 172.29.236.12 | | |
| infra3 | 172.29.236.13 | | |
| az1_ceph1 | 172.29.237.201 | | 172.29.244.201 |
| az1_ceph2 | 172.29.237.202 | | 172.29.244.202 |
| az1_ceph3 | 172.29.237.203 | | 172.29.244.203 |
| az2_ceph1 | 172.29.238.201 | | 172.29.245.201 |
| az2_ceph2 | 172.29.238.202 | | 172.29.245.202 |
| az2_ceph3 | 172.29.238.203 | | 172.29.245.203 |
| az3_ceph1 | 172.29.239.201 | | 172.29.246.201 |
| az3_ceph2 | 172.29.239.202 | | 172.29.246.202 |
| az3_ceph3 | 172.29.239.203 | | 172.29.246.203 |
| az1_compute1 | 172.29.237.11 | 172.29.240.11 | 172.29.244.11 |
| az1_compute2 | 172.29.237.12 | 172.29.240.12 | 172.29.244.12 |
| az1_pin_compute1 | 172.29.237.13 | 172.29.240.13 | 172.29.244.13 |
| az1_pin_compute2 | 172.29.237.14 | 172.29.240.14 | 172.29.244.14 |
| az2_compute1 | 172.29.238.11 | 172.29.241.11 | 172.29.245.11 |
| az2_compute2 | 172.29.238.12 | 172.29.241.12 | 172.29.245.12 |
| az3_compute1 | 172.29.239.11 | 172.29.242.11 | 172.29.246.11 |
| az3_compute2 | 172.29.239.12 | 172.29.242.12 | 172.29.246.12 |
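The tunnel and storage addresses in this table follow a simple rule. The last octet of the host's management IP (the `_management_rank` used in the host network configuration) is added to the network address of the AZ's tunnel or storage CIDR. The Python sketch below reproduces that rule with the standard ipaddress module. It only approximates what `ansible.utils.ipmath` does when applied to a CIDR, and the helper names are hypothetical.

```python
import ipaddress

# Per-AZ CIDRs from the CIDR/VLAN table.
TUNNEL = {"az1": "172.29.240.0/24", "az2": "172.29.241.0/24", "az3": "172.29.242.0/24"}
STORAGE = {"az1": "172.29.244.0/24", "az2": "172.29.245.0/24", "az3": "172.29.246.0/24"}


def management_rank(management_address):
    """Last octet of the management IP, as _management_rank computes it."""
    return int(management_address.split(".")[-1])


def offset_address(cidr, rank):
    """Network address of cidr plus rank (similar to ipmath applied to a CIDR)."""
    return str(ipaddress.ip_network(cidr).network_address + rank)


def derived_ips(az_name, management_address):
    """Tunnel and storage IPs a host in az_name gets from its management IP."""
    rank = management_rank(management_address)
    return {
        "tunnel": offset_address(TUNNEL[az_name], rank),
        "storage": offset_address(STORAGE[az_name], rank),
    }


if __name__ == "__main__":
    print(derived_ips("az1", "172.29.237.13"))
```

Hosts in different AZs can share a last octet (for example az1_compute1 and az2_compute1 both end in .11) without clashing, because each AZ has its own /24 tunnel and storage subnets.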

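Host network configuration¶

Each host needs the correct network bridges in place. In this example, we use the systemd_networkd role, which performs the configuration for us when openstack_hosts runs. It creates all required VLANs and bridges. The only prerequisite is SSH connectivity to the host, so that Ansible can manage it.

Note

This example assumes that the default gateway is set through the bond0 interface, which aggregates the eth0 and eth1 links. If your environment has no eth0 and uses p1p1 or another interface name instead, make sure to replace references to eth0 with the appropriate name. The same applies to the other network interfaces.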

---
# VLAN Mappings
_az_vlan_mappings:
  az1:
    management: 10
    storage: 20
    tunnel: 30
    public-api: 400
  az2:
    management: 10
    storage: 21
    tunnel: 31
    public-api: 400
  az3:
    management: 10
    storage: 22
    tunnel: 32
    public-api: 400

# Bonding interfaces
_bond0_interfaces:
  - eth0
  - eth1

# NETDEV definition
_systemd_networkd_default_devices:
  - NetDev:
      Name: vlan-mgmt
      Kind: vlan
    VLAN:
      Id: "{{ _az_vlan_mappings[az_name]['management'] }}"
    filename: 10-openstack-vlan-mgmt
  - NetDev:
      Name: bond0
      Kind: bond
    Bond:
      Mode: 802.3ad
      TransmitHashPolicy: layer3+4
      LACPTransmitRate: fast
      MIIMonitorSec: 100
    filename: 05-general-bond0
  - NetDev:
      Name: "{{ management_bridge }}"
      Kind: bridge
    Bridge:
      ForwardDelaySec: 0
      HelloTimeSec: 2
      MaxAgeSec: 12
      STP: off
    filename: "11-openstack-{{ management_bridge }}"

_systemd_networkd_storage_devices:
  - NetDev:
      Name: vlan-stor
      Kind: vlan
    VLAN:
      Id: "{{ _az_vlan_mappings[az_name]['storage'] }}"
    filename: 12-openstack-vlan-stor
  - NetDev:
      Name: br-storage
      Kind: bridge
    Bridge:
      ForwardDelaySec: 0
      HelloTimeSec: 2
      MaxAgeSec: 12
      STP: off
    filename: 13-openstack-br-storage

_systemd_networkd_tunnel_devices:
  - NetDev:
      Name: vlan-tunnel
      Kind: vlan
    VLAN:
      Id: "{{ _az_vlan_mappings[az_name]['tunnel'] }}"
    filename: 16-openstack-vlan-tunnel

_systemd_networkd_pub_api_devices:
  - NetDev:
      Name: vlan-public-api
      Kind: vlan
    VLAN:
      Id: "{{ _az_vlan_mappings[az_name]['public-api'] }}"
    filename: 17-openstack-vlan-public-api
  - NetDev:
      Name: br-public-api
      Kind: bridge
    Bridge:
      ForwardDelaySec: 0
      HelloTimeSec: 2
      MaxAgeSec: 12
      STP: off
    filename: 18-openstack-br-public-api

# NOTE: _systemd_networkd_cluster_devices is not defined in this example, because the
# Ceph cluster network reuses the storage network (cluster_network in user_variables.yml).
# default([]) prevents the template from failing on Ceph hosts; define the variable if you
# use a separate Ceph cluster network.
openstack_hosts_systemd_networkd_devices: |-
  {% set devices = [] %}
  {% if is_metal %}
  {%   set _ = devices.extend(_systemd_networkd_default_devices) %}
  {%   if inventory_hostname in (groups['compute_hosts'] + groups['storage_hosts']) %}
  {%     set _ = devices.extend(_systemd_networkd_storage_devices) %}
  {%   endif %}
  {%   if inventory_hostname in (groups[az_name ~ '_ceph_mon_hosts'] + groups[az_name ~ '_ceph_osd_hosts']) %}
  {%     set _ = devices.extend(_systemd_networkd_cluster_devices | default([])) %}
  {%   endif %}
  {%   if inventory_hostname in groups['compute_hosts'] %}
  {%     set _ = devices.extend(_systemd_networkd_tunnel_devices) %}
  {%   endif %}
  {%   if inventory_hostname in groups['haproxy_hosts'] %}
  {%     set _ = devices.extend(_systemd_networkd_pub_api_devices) %}
  {%   endif %}
  {% endif %}
  {{ devices }}

# NETWORK definition

# NOTE: this works only if the management network has the same netmask as all other networks,
# while in this example management is a /22 and the rest are /24
# _management_rank: "{{ management_address | ansible.utils.ipsubnet(hostvars[inventory_hostname]['cidr_networks']['management']) }}"
_management_rank: "{{ (management_address | split('.'))[-1] }}"

# NOTE: `05` is prefixed to filename to have precedence over netplan
_systemd_networkd_bonded_networks: |-
  {% set struct = [] %}
  {% for interface in _bond0_interfaces %}
  {%   set interface_data = ansible_facts[interface | replace('-', '_')] %}
  {%   set _ = struct.append({
         'interface': interface_data['device'],
         'filename' : '05-general-' ~ interface_data['device'],
         'bond': 'bond0',
         'link_config_overrides': {
           'Match': {
             'MACAddress': interface_data['macaddress']
           }
         }
       })
  %}
  {% endfor %}
  {% set bond_vlans = ['vlan-mgmt'] %}
  {% if inventory_hostname in (groups['compute_hosts'] + groups['storage_hosts']) %}
  {%   set _ = bond_vlans.append('vlan-stor') %}
  {% endif %}
  {% if inventory_hostname in groups['haproxy_hosts'] %}
  {%   set _ = bond_vlans.append('vlan-public-api') %}
  {% endif %}
  {% if inventory_hostname in groups['compute_hosts'] %}
  {%   set _ = bond_vlans.append('vlan-tunnel') %}
  {% endif %}
  {% set _ = struct.append({
       'interface': 'bond0',
       'filename': '05-general-bond0',
       'vlan': bond_vlans
     })
  %}
  {{ struct }}

_systemd_networkd_mgmt_networks:
  - interface: "vlan-mgmt"
    bridge: "{{ management_bridge }}"
    filename: 10-openstack-vlan-mgmt
  - interface: "{{ management_bridge }}"
    address: "{{ management_address }}"
    netmask: "{{ cidr_networks['management'] | ansible.utils.ipaddr('netmask') }}"
    filename: "11-openstack-{{ management_bridge }}"

_systemd_networkd_storage_networks:
  - interface: "vlan-stor"
    bridge: "br-storage"
    filename: 12-openstack-vlan-stor
  - interface: "br-storage"
    address: "{{ cidr_networks['storage_' ~ az_name] | ansible.utils.ipmath(_management_rank) }}"
    netmask: "{{ cidr_networks['storage_' ~ az_name] | ansible.utils.ipaddr('netmask') }}"
    filename: "13-openstack-br-storage"

_systemd_networkd_tunnel_networks:
  - interface: "vlan-tunnel"
    filename: 16-openstack-vlan-tunnel
    address: "{{ cidr_networks['tunnel_' ~ az_name] | ansible.utils.ipmath(_management_rank) }}"
    netmask: "{{ cidr_networks['tunnel_' ~ az_name] | ansible.utils.ipaddr('netmask') }}"
    static_routes: |-
      {% set routes = [] %}
      {% set tunnel_cidrs = cidr_networks | dict2items | selectattr('key', 'match', 'tunnel_az[0-9]') | map(attribute='value') %}
      {% set gateway = cidr_networks['tunnel_' ~ az_name] | ansible.utils.ipaddr('1') | ansible.utils.ipaddr('address') %}
      {% for cidr in tunnel_cidrs | reject('eq', cidr_networks['tunnel_' ~ az_name]) %}
      {%   set _ = routes.append({'cidr': cidr, 'gateway': gateway}) %}
      {% endfor %}
      {{ routes }}

_systemd_networkd_pub_api_networks:
  - interface: "vlan-public-api"
    bridge: "br-public-api"
    filename: 17-openstack-vlan-public-api
  - interface: "br-public-api"
    filename: "18-openstack-br-public-api"

openstack_hosts_systemd_networkd_networks: |-
  {% set networks = [] %}
  {% if is_metal %}
  {%   set _ = networks.extend(_systemd_networkd_mgmt_networks + _systemd_networkd_bonded_networks) %}
  {%   if inventory_hostname in (groups['compute_hosts'] + groups['storage_hosts']) %}
  {%     set _ = networks.extend(_systemd_networkd_storage_networks) %}
  {%   endif %}
  {%   if inventory_hostname in groups['compute_hosts'] %}
  {%     set _ = networks.extend(_systemd_networkd_tunnel_networks) %}
  {%   endif %}
  {%   if inventory_hostname in groups['haproxy_hosts'] %}
  {%     set _ = networks.extend(_systemd_networkd_pub_api_networks) %}
  {%   endif %}
  {% endif %}
  {{ networks }}
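The `static_routes` template for the tunnel interface routes every other AZ's tunnel CIDR through the first host address of the local tunnel subnet. The Python sketch below mirrors that logic so you can see which routes a host ends up with. It assumes the `.1` address in each tunnel subnet is the router between AZs, as the template implies. The function name is hypothetical.

```python
import ipaddress
import re

# cidr_networks from openstack_user_config.yml in this example.
CIDR_NETWORKS = {
    "management": "172.29.236.0/22",
    "tunnel_az1": "172.29.240.0/24",
    "tunnel_az2": "172.29.241.0/24",
    "tunnel_az3": "172.29.242.0/24",
    "storage_az1": "172.29.244.0/24",
    "storage_az2": "172.29.245.0/24",
    "storage_az3": "172.29.246.0/24",
    "public_api_vip": "203.0.113.0/28",
}

# Same pattern as the Jinja 'match' test (anchored at the start of the key).
TUNNEL_KEY = re.compile(r"tunnel_az[0-9]")


def tunnel_static_routes(cidr_networks, az_name):
    """Routes to the other AZs' tunnel CIDRs via the local tunnel gateway."""
    local = cidr_networks["tunnel_" + az_name]
    # Assumed gateway: first host address of the local tunnel subnet (ipaddr('1')).
    gateway = str(ipaddress.ip_network(local).network_address + 1)
    return [
        {"cidr": cidr, "gateway": gateway}
        for key, cidr in cidr_networks.items()
        if TUNNEL_KEY.match(key) and cidr != local
    ]


if __name__ == "__main__":
    for route in tunnel_static_routes(CIDR_NETWORKS, "az2"):
        print(route)
```

A compute host in az2 therefore gets two routes, one each for the az1 and az3 tunnel networks, both via 172.29.241.1.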

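Deployment configuration¶

Environment customizations¶

Files deployed in /etc/openstack_deploy/env.d allow you to customize Ansible groups.

To deploy HAProxy in a container, create the file /etc/openstack_deploy/env.d/haproxy.yml with the following content: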

---
# This file contains an example to show how to set
# the HAProxy service to run in a container.
#
# Important note:
# In most cases you need to ensure that default route inside of the
# container doesn't go through eth0, which is part of lxcbr0 and
# SRC nat-ed. You need to pass "public" VIP interface inside of the
# container and ensure "default" route presence on it.

container_skel:
  haproxy_container:
    properties:
      is_metal: false

Because this environment uses Ceph, cinder-volume runs in a container on the Ceph Monitor hosts. To achieve this, create the file /etc/openstack_deploy/env.d/cinder.yml with the following content:

---
# This file contains an example to show how to set
# the cinder-volume service to run in a container.
#
# Important note:
# When using LVM or any iSCSI-based cinder backends, such as NetApp with
# iSCSI protocol, the cinder-volume service *must* run on metal.
# Reference: https://bugs.launchpad.net/ubuntu/+source/lxc/+bug/1226855

container_skel:
  cinder_volumes_container:
    properties:
      is_metal: false

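To be able to run playbooks against the hosts of a single Availability Zone only, and to set AZ-specific variables, we need to define the corresponding groups. To do that, create the file /etc/openstack_deploy/env.d/az.yml with the following content: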

---
component_skel:
  az1_containers:
    belongs_to:
      - az1_all
  az1_hosts:
    belongs_to:
      - az1_all
  az2_containers:
    belongs_to:
      - az2_all
  az2_hosts:
    belongs_to:
      - az2_all
  az3_containers:
    belongs_to:
      - az3_all
  az3_hosts:
    belongs_to:
      - az3_all

container_skel:
  az1_containers:
    properties:
      is_nest: true
  az2_containers:
    properties:
      is_nest: true
  az3_containers:
    properties:
      is_nest: true

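The example above creates the following groups:

azN_hosts, which contains only the bare metal nodes

azN_containers, which contains all containers spawned on those bare metal nodes

azN_all, which contains the members of both azN_hosts and azN_containers

We also need to define a completely new set of groups for Ceph, so that multiple independent Ceph instances can be deployed.

To do that, create the file /etc/openstack_deploy/env.d/ceph.yml with the following content: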

---
component_skel:
  # Ceph MON
  ceph_mon_az1:
    belongs_to:
      - ceph-mon
      - ceph_all
      - az1_all
  ceph_mon_az2:
    belongs_to:
      - ceph-mon
      - ceph_all
      - az2_all
  ceph_mon_az3:
    belongs_to:
      - ceph-mon
      - ceph_all
      - az3_all

  # Ceph OSD
  ceph_osd_az1:
    belongs_to:
      - ceph-osd
      - ceph_all
      - az1_all
  ceph_osd_az2:
    belongs_to:
      - ceph-osd
      - ceph_all
      - az2_all
  ceph_osd_az3:
    belongs_to:
      - ceph-osd
      - ceph_all
      - az3_all

  # Ceph RGW
  ceph_rgw_az1:
    belongs_to:
      - ceph-rgw
      - ceph_all
      - az1_all
  ceph_rgw_az2:
    belongs_to:
      - ceph-rgw
      - ceph_all
      - az2_all
  ceph_rgw_az3:
    belongs_to:
      - ceph-rgw
      - ceph_all
      - az3_all

container_skel:
  # Ceph MON
  ceph_mon_container_az1:
    belongs_to:
      - az1_ceph_mon_containers
    contains:
      - ceph_mon_az1
  ceph_mon_container_az2:
    belongs_to:
      - az2_ceph_mon_containers
    contains:
      - ceph_mon_az2
  ceph_mon_container_az3:
    belongs_to:
      - az3_ceph_mon_containers
    contains:
      - ceph_mon_az3

  # Ceph RGW
  ceph_rgw_container_az1:
    belongs_to:
      - az1_ceph_rgw_containers
    contains:
      - ceph_rgw_az1
  ceph_rgw_container_az2:
    belongs_to:
      - az2_ceph_rgw_containers
    contains:
      - ceph_rgw_az2
  ceph_rgw_container_az3:
    belongs_to:
      - az3_ceph_rgw_containers
    contains:
      - ceph_rgw_az3

  # Ceph OSD
  ceph_osd_container_az1:
    belongs_to:
      - az1_ceph_osd_containers
    contains:
      - ceph_osd_az1
    properties:
      is_metal: true
  ceph_osd_container_az2:
    belongs_to:
      - az2_ceph_osd_containers
    contains:
      - ceph_osd_az2
    properties:
      is_metal: true
  ceph_osd_container_az3:
    belongs_to:
      - az3_ceph_osd_containers
    contains:
      - ceph_osd_az3
    properties:
      is_metal: true

physical_skel:
  # Ceph MON
  az1_ceph_mon_containers:
    belongs_to:
      - all_containers
  az1_ceph_mon_hosts:
    belongs_to:
      - hosts
  az2_ceph_mon_containers:
    belongs_to:
      - all_containers
  az2_ceph_mon_hosts:
    belongs_to:
      - hosts
  az3_ceph_mon_containers:
    belongs_to:
      - all_containers
  az3_ceph_mon_hosts:
    belongs_to:
      - hosts

  # Ceph OSD
  az1_ceph_osd_containers:
    belongs_to:
      - all_containers
  az1_ceph_osd_hosts:
    belongs_to:
      - hosts
  az2_ceph_osd_containers:
    belongs_to:
      - all_containers
  az2_ceph_osd_hosts:
    belongs_to:
      - hosts
  az3_ceph_osd_containers:
    belongs_to:
      - all_containers
  az3_ceph_osd_hosts:
    belongs_to:
      - hosts

  # Ceph RGW
  az1_ceph_rgw_containers:
    belongs_to:
      - all_containers
  az1_ceph_rgw_hosts:
    belongs_to:
      - hosts
  az2_ceph_rgw_containers:
    belongs_to:
      - all_containers
  az2_ceph_rgw_hosts:
    belongs_to:
      - hosts
  az3_ceph_rgw_containers:
    belongs_to:
      - all_containers
  az3_ceph_rgw_hosts:
    belongs_to:
      - hosts

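Environment layout¶

The /etc/openstack_deploy/openstack_user_config.yml file defines the environment layout.

For each AZ, a group containing all hosts within that AZ needs to be defined.

Within the defined provider networks, address_prefix overrides the prefix of the key that is added to each host with its IP address information. In this example, the storage networks use AZ-specific prefixes (storage_az1, storage_az2, storage_az3), while all three tunnel networks share the common tunnel prefix. reference_group contains the name of one of the defined AZ groups and limits the scope of each provider network to that group.

YAML anchors and aliases are used heavily in the example below. They populate every group that might come in handy without repeating host definitions. You can read more about this topic in the Ansible documentation.

The following configuration describes the layout of this environment.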

---
cidr_networks: &os_cidrs
  management: 172.29.236.0/22
  tunnel_az1: 172.29.240.0/24
  tunnel_az2: 172.29.241.0/24
  tunnel_az3: 172.29.242.0/24
  storage_az1: 172.29.244.0/24
  storage_az2: 172.29.245.0/24
  storage_az3: 172.29.246.0/24
  public_api_vip: 203.0.113.0/28

used_ips:
  # management network - openstack VIPs
  - "172.29.236.1,172.29.236.30"
  # management network - other hosts not managed by OSA dynamic inventory
  - "172.29.238.0,172.29.239.255"
  # storage network - reserved for ceph hosts
  - "172.29.244.200,172.29.244.250"
  - "172.29.245.200,172.29.245.250"
  - "172.29.246.200,172.29.246.250"
  # public_api
  - "203.0.113.1,203.0.113.10"

global_overrides:
  internal_lb_vip_address: internal.example.cloud
  external_lb_vip_address: example.cloud
  management_bridge: "br-mgmt"
  cidr_networks: *os_cidrs
  provider_networks:
    - network:
        group_binds:
          - all_containers
          - hosts
        type: "raw"
        container_bridge: "br-mgmt"
        container_interface: "eth1"
        container_type: "veth"
        ip_from_q: "management"
        is_management_address: true
    - network:
        container_bridge: "br-storage"
        container_type: "veth"
        container_interface: "eth2"
        ip_from_q: "storage_az1"
        address_prefix: "storage_az1"
        type: "raw"
        group_binds:
          - cinder_volume
          - nova_compute
          - ceph_all
        reference_group: "az1_all"
    - network:
        container_bridge: "br-storage"
        container_type: "veth"
        container_interface: "eth2"
        ip_from_q: "storage_az2"
        address_prefix: "storage_az2"
        type: "raw"
        group_binds:
          - cinder_volume
          - nova_compute
          - ceph_all
        reference_group: "az2_all"
    - network:
        container_bridge: "br-storage"
        container_type: "veth"
        container_interface: "eth2"
        ip_from_q: "storage_az3"
        address_prefix: "storage_az3"
        type: "raw"
        group_binds:
          - cinder_volume
          - nova_compute
          - ceph_all
        reference_group: "az3_all"
    - network:
        container_bridge: "vlan-tunnel"
        container_type: "veth"
        container_interface: "eth4"
        ip_from_q: "tunnel_az1"
        address_prefix: "tunnel"
        type: "raw"
        group_binds:
          - neutron_ovn_controller
        reference_group: "az1_all"
    - network:
        container_bridge: "vlan-tunnel"
        container_type: "veth"
        container_interface: "eth4"
        ip_from_q: "tunnel_az2"
        address_prefix: "tunnel"
        type: "raw"
        group_binds:
          - neutron_ovn_controller
        reference_group: "az2_all"
    - network:
        container_bridge: "vlan-tunnel"
        container_type: "veth"
        container_interface: "eth4"
        ip_from_q: "tunnel_az3"
        address_prefix: "tunnel"
        type: "raw"
        group_binds:
          - neutron_ovn_controller
        reference_group: "az3_all"
    - network:
        group_binds:
          - haproxy
        type: "raw"
        container_bridge: "br-public-api"
        container_interface: "eth20"
        container_type: "veth"
        ip_from_q: public_api_vip
        static_routes:
          - cidr: 0.0.0.0/0
            gateway: 203.0.113.1

### conf.d configuration ###

# Control plane
az1_controller_hosts: &controller_az1
  infra1:
    ip: 172.29.236.11

az2_controller_hosts: &controller_az2
  infra2:
    ip: 172.29.236.12

az3_controller_hosts: &controller_az3
  infra3:
    ip: 172.29.236.13

# Computes

## AZ1
az1_shared_compute_hosts: &shared_computes_az1
  az1_compute1:
    ip: 172.29.237.11
  az1_compute2:
    ip: 172.29.237.12

az1_pinned_compute_hosts: &pinned_computes_az1
  az1_pin_compute1:
    ip: 172.29.237.13
  az1_pin_compute2:
    ip: 172.29.237.14

## AZ2
az2_shared_compute_hosts: &shared_computes_az2
  az2_compute1:
    ip: 172.29.238.11
  az2_compute2:
    ip: 172.29.238.12

## AZ3
az3_shared_compute_hosts: &shared_computes_az3
  az3_compute1:
    ip: 172.29.239.11
  az3_compute2:
    ip: 172.29.239.12

# Storage

## AZ1
az1_storage_hosts: &storage_az1
  az1_ceph1:
    ip: 172.29.237.201
  az1_ceph2:
    ip: 172.29.237.202
  az1_ceph3:
    ip: 172.29.237.203

## AZ2
az2_storage_hosts: &storage_az2
  az2_ceph1:
    ip: 172.29.238.201
  az2_ceph2:
    ip: 172.29.238.202
  az2_ceph3:
    ip: 172.29.238.203

## AZ3
az3_storage_hosts: &storage_az3
  az3_ceph1:
    ip: 172.29.239.201
  az3_ceph2:
    ip: 172.29.239.202
  az3_ceph3:
    ip: 172.29.239.203

# AZ association
az1_compute_hosts: &compute_hosts_az1
  <<: [*shared_computes_az1, *pinned_computes_az1]

az2_compute_hosts: &compute_hosts_az2
  <<: *shared_computes_az2

az3_compute_hosts: &compute_hosts_az3
  <<: *shared_computes_az3

az1_hosts:
  <<: [*compute_hosts_az1, *controller_az1, *storage_az1]

az2_hosts:
  <<: [*compute_hosts_az2, *controller_az2, *storage_az2]

az3_hosts:
  <<: [*compute_hosts_az3, *controller_az3, *storage_az3]

# Final mappings
shared_infra_hosts: &controllers
  <<: [*controller_az1, *controller_az2, *controller_az3]

repo-infra_hosts: *controllers
memcaching_hosts: *controllers
database_hosts: *controllers
mq_hosts: *controllers
operator_hosts: *controllers
identity_hosts: *controllers
image_hosts: *controllers
dashboard_hosts: *controllers
compute-infra_hosts: *controllers
placement-infra_hosts: *controllers
storage-infra_hosts: *controllers
network-infra_hosts: *controllers
network-northd_hosts: *controllers
coordination_hosts: *controllers

compute_hosts: &computes
  <<: [*compute_hosts_az1, *compute_hosts_az2, *compute_hosts_az3]

pinned_compute_hosts:
  <<: *pinned_computes_az1

shared_compute_hosts:
  <<: [*shared_computes_az1, *shared_computes_az2, *shared_computes_az3]

network-gateway_hosts: *computes

storage_hosts: &storage
  <<: [*storage_az1, *storage_az2, *storage_az3]

az1_ceph_osd_hosts:
  <<: *storage_az1

az2_ceph_osd_hosts:
  <<: *storage_az2

az3_ceph_osd_hosts:
  <<: *storage_az3

az1_ceph_mon_hosts:
  <<: *storage_az1

az2_ceph_mon_hosts:
  <<: *storage_az2

az3_ceph_mon_hosts:
  <<: *storage_az3

az1_ceph_rgw_hosts:
  <<: *storage_az1

az2_ceph_rgw_hosts:
  <<: *storage_az2

az3_ceph_rgw_hosts:
  <<: *storage_az3
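The `<<: [*a, *b]` merge keys above are resolved by the YAML parser when the file is loaded. The Python sketch below emulates the YAML merge-key rules with plain dictionaries: mappings earlier in the list win over later ones, and keys written explicitly next to `<<` win over all merged keys. It illustrates how `az1_hosts` ends up containing every AZ1 host. It is not the parser OpenStack-Ansible uses.

```python
# Emulation of YAML merge keys (`<<: [*a, *b]`) with plain dicts.
# Earlier mappings in the list take precedence over later ones, and explicit
# local keys take precedence over all merged keys.


def yaml_merge(merged, local=None):
    """Merge a list of mappings the way a YAML `<<` merge key does."""
    result = {}
    for mapping in reversed(merged):  # apply later mappings first...
        result.update(mapping)        # ...so earlier ones overwrite them
    result.update(local or {})        # explicit keys always win
    return result


# Anchors from the AZ1 part of openstack_user_config.yml.
controller_az1 = {"infra1": {"ip": "172.29.236.11"}}
shared_computes_az1 = {"az1_compute1": {"ip": "172.29.237.11"}, "az1_compute2": {"ip": "172.29.237.12"}}
pinned_computes_az1 = {"az1_pin_compute1": {"ip": "172.29.237.13"}, "az1_pin_compute2": {"ip": "172.29.237.14"}}
storage_az1 = {
    "az1_ceph1": {"ip": "172.29.237.201"},
    "az1_ceph2": {"ip": "172.29.237.202"},
    "az1_ceph3": {"ip": "172.29.237.203"},
}

compute_hosts_az1 = yaml_merge([shared_computes_az1, pinned_computes_az1])
az1_hosts = yaml_merge([compute_hosts_az1, controller_az1, storage_az1])

if __name__ == "__main__":
    print(sorted(az1_hosts))
```

Because every host name here is unique, precedence never actually matters in this example. It only becomes relevant if the same host appears in two merged anchors with different values.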

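User variables¶

To configure the Availability Zones correctly, we use group_vars to define the Availability Zone name for each AZ. To do that, create the following files:

/etc/openstack_deploy/group_vars/az1_all.yml

/etc/openstack_deploy/group_vars/az2_all.yml

/etc/openstack_deploy/group_vars/az3_all.yml

with the following content, where N is the AZ number matching the file name: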

az_name: azN

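In this environment, the load balancer runs in LXC containers on the infrastructure hosts, so we need to ensure that there is no default route on the eth0 interface. To prevent that, we override lxc_container_networks in the /etc/openstack_deploy/group_vars/haproxy/lxc_network.yml file: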

---
lxc_container_networks:
  lxcbr0_address:
    bridge: "{{ lxc_net_bridge | default('lxcbr0') }}"
    bridge_type: "{{ lxc_net_bridge_type | default('linuxbridge') }}"
    interface: eth0
    type: veth
    dhcp_use_routes: False

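Next, we want HAProxy to always point to the backends that are considered "local". To do that, we switch the balancing algorithm to first and reorder the backends so that those from the current Availability Zone come first in the list. This can be done by creating the file /etc/openstack_deploy/group_vars/haproxy/backend_overrides.yml with the following content: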

---
haproxy_drain: true
haproxy_ssl_all_vips: true
haproxy_bind_external_lb_vip_interface: eth20
haproxy_bind_internal_lb_vip_interface: eth1
haproxy_bind_external_lb_vip_address: "*"
haproxy_bind_internal_lb_vip_address: "*"
haproxy_vip_binds:
  - address: "{{ haproxy_bind_external_lb_vip_address }}"
    interface: "{{ haproxy_bind_external_lb_vip_interface }}"
    type: external
  - address: "{{ haproxy_bind_internal_lb_vip_address }}"
    interface: "{{ haproxy_bind_internal_lb_vip_interface }}"
    type: internal

haproxy_cinder_api_service_overrides:
  haproxy_backend_nodes: "{{ groups['cinder_api'] | select('in', groups[az_name ~ '_containers']) | union(groups['cinder_api']) | unique | default([]) }}"
  haproxy_balance_alg: first
  haproxy_limit_hosts: "{{ groups['haproxy_all'] | intersect(groups[az_name ~ '_all']) }}"

haproxy_horizon_service_overrides:
  haproxy_backend_nodes: "{{ groups['horizon_all'] | select('in', groups[az_name ~ '_containers']) | union(groups['horizon_all']) | unique | default([]) }}"
  haproxy_balance_alg: first
  haproxy_limit_hosts: "{{ groups['haproxy_all'] | intersect(groups[az_name ~ '_all']) }}"

haproxy_keystone_service_overrides:
  haproxy_backend_nodes: "{{ groups['keystone_all'] | select('in', groups[az_name ~ '_containers']) | union(groups['keystone_all']) | unique | default([]) }}"
  haproxy_balance_alg: first
  haproxy_limit_hosts: "{{ groups['haproxy_all'] | intersect(groups[az_name ~ '_all']) }}"

haproxy_neutron_server_service_overrides:
  haproxy_backend_nodes: "{{ groups['neutron_server'] | select('in', groups[az_name ~ '_containers']) | union(groups['neutron_server']) | unique | default([]) }}"
  haproxy_balance_alg: first
  haproxy_limit_hosts: "{{ groups['haproxy_all'] | intersect(groups[az_name ~ '_all']) }}"

haproxy_nova_api_compute_service_overrides:
  haproxy_backend_nodes: "{{ groups['nova_api_os_compute'] | select('in', groups[az_name ~ '_containers']) | union(groups['nova_api_os_compute']) | unique | default([]) }}"
  haproxy_balance_alg: first
  haproxy_limit_hosts: "{{ groups['haproxy_all'] | intersect(groups[az_name ~ '_all']) }}"

haproxy_nova_api_metadata_service_overrides:
  haproxy_backend_nodes: "{{ groups['nova_api_metadata'] | select('in', groups[az_name ~ '_containers']) | union(groups['nova_api_metadata']) | unique | default([]) }}"
  haproxy_balance_alg: first
  haproxy_limit_hosts: "{{ groups['haproxy_all'] | intersect(groups[az_name ~ '_all']) }}"

haproxy_placement_service_overrides:
  haproxy_backend_nodes: "{{ groups['placement_all'] | select('in', groups[az_name ~ '_containers']) | union(groups['placement_all']) | unique | default([]) }}"
  haproxy_balance_alg: first
  haproxy_limit_hosts: "{{ groups['haproxy_all'] | intersect(groups[az_name ~ '_all']) }}"

haproxy_repo_service_overrides:
  haproxy_backend_nodes: "{{ groups['repo_all'] | select('in', groups[az_name ~ '_containers']) | union(groups['repo_all']) | unique | default([]) }}"
  haproxy_balance_alg: first
  haproxy_limit_hosts: "{{ groups['haproxy_all'] | intersect(groups[az_name ~ '_all']) }}"
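Two mechanisms combine in these overrides: the backend list is reordered so that local backends come first, and HAProxy's `first` algorithm picks the first usable server in that order. The Python sketch below models both. `local_first` approximates what `select('in', local) | union(all) | unique` produces. `pick_first` is a simplified `balance first` that ignores per-server `maxconn` (real HAProxy moves on to the next server once the first one's connection slots are full). The container names are hypothetical.

```python
# Hypothetical sketch of the backend ordering and of HAProxy's "first"
# algorithm as configured above. Container names are made up.


def local_first(backends, local_members):
    """Local backends first, then the remaining ones, keeping the original order."""
    ordered = [b for b in backends if b in local_members]
    ordered += [b for b in backends if b not in local_members]
    return ordered


def pick_first(ordered_backends, healthy):
    """Simplified 'balance first': the first healthy server in list order wins."""
    for backend in ordered_backends:
        if backend in healthy:
            return backend
    return None  # no healthy backend left


ALL_KEYSTONE = ["infra1_keystone", "infra2_keystone", "infra3_keystone"]
# The HAProxy instance in az2 treats infra2's container as local.
order_az2 = local_first(ALL_KEYSTONE, {"infra2_keystone"})

if __name__ == "__main__":
    print(order_az2)
    print(pick_first(order_az2, set(ALL_KEYSTONE)))
```

While the local backend is healthy, all requests stay in the AZ. If it fails, traffic falls over to the next backend in the list, which is a remote one.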

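We also need to define several additional Keepalived instances to support the DNS round-robin approach, and to configure Keepalived in unicast mode. To do that, create the file /etc/openstack_deploy/group_vars/haproxy/keepalived.yml with the following content: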

---
haproxy_keepalived_external_vip_cidr_az1: 203.0.113.5/32
haproxy_keepalived_external_vip_cidr_az2: 203.0.113.6/32
haproxy_keepalived_external_vip_cidr_az3: 203.0.113.7/32
haproxy_keepalived_internal_vip_cidr_az1: 172.29.236.21/32
haproxy_keepalived_internal_vip_cidr_az2: 172.29.236.22/32
haproxy_keepalived_internal_vip_cidr_az3: 172.29.236.23/32
haproxy_keepalived_external_interface: "{{ haproxy_bind_external_lb_vip_interface }}"
haproxy_keepalived_internal_interface: "{{ haproxy_bind_internal_lb_vip_interface }}"

keepalived_unicast_peers:
  internal: |-
    {% set peers = [] %}
    {% for addr in groups['haproxy'] | map('extract', hostvars, ['container_networks', 'management_address']) %}
    {%   set _ = peers.append((addr['address'] ~ '/' ~ addr['netmask']) | ansible.utils.ipaddr('host/prefix')) %}
    {% endfor %}
    {{ peers }}
  external: |-
    {% set peers = [] %}
    {% for addr in groups['haproxy'] | map('extract', hostvars, ['container_networks', 'public_api_vip_address']) %}
    {%   set _ = peers.append((addr['address'] ~ '/' ~ addr['netmask']) | ansible.utils.ipaddr('host/prefix')) %}
    {% endfor %}
    {{ peers }}

keepalived_internal_unicast_src_ip: >-
  {{ (management_address ~ '/' ~ container_networks['management_address']['netmask']) | ansible.utils.ipaddr('host/prefix') }}
keepalived_external_unicast_src_ip: >-
  {{ (container_networks['public_api_vip_address']['address'] ~ '/' ~ container_networks['public_api_vip_address']['netmask']) | ansible.utils.ipaddr('host/prefix') }}

keepalived_instances:
  az1-external:
    interface: "{{ haproxy_keepalived_external_interface | default(management_bridge) }}"
    state: "{{ (inventory_hostname in groups['az1_all']) | ternary('MASTER', 'BACKUP') }}"
    virtual_router_id: 40
    priority: "{{ (inventory_hostname in groups['az1_all']) | ternary(200, (groups['haproxy']|length-groups['haproxy'].index(inventory_hostname))*50) }}"
    vips:
      - "{{ haproxy_keepalived_external_vip_cidr_az1 | default('169.254.1.1/24') }} dev {{ haproxy_keepalived_external_interface | default(management_bridge) }}"
    track_scripts: "{{ keepalived_scripts | dict2items | json_query('[*].{name: key, instance: value.instance}') | rejectattr('instance', 'equalto', 'internal') | map(attribute='name') | list }}"
    unicast_src_ip: "{{ keepalived_external_unicast_src_ip }}"
    unicast_peers: "{{ keepalived_unicast_peers['external'] | difference([keepalived_external_unicast_src_ip]) }}"
  az1-internal:
    interface: "{{ haproxy_keepalived_internal_interface | default(management_bridge) }}"
    state: "{{ (inventory_hostname in groups['az1_all']) | ternary('MASTER', 'BACKUP') }}"
    virtual_router_id: 41
    priority: "{{ (inventory_hostname in groups['az1_all']) | ternary(200, (groups['haproxy']|length-groups['haproxy'].index(inventory_hostname))*50) }}"
    vips:
      - "{{ haproxy_keepalived_internal_vip_cidr_az1 | default('169.254.2.1/24') }} dev {{ haproxy_keepalived_internal_interface | default(management_bridge) }}"
    track_scripts: "{{ keepalived_scripts | dict2items | json_query('[*].{name: key, instance: value.instance}') | rejectattr('instance', 'equalto', 'external') | map(attribute='name') | list }}"
    unicast_src_ip: "{{ keepalived_internal_unicast_src_ip }}"
    unicast_peers: "{{ keepalived_unicast_peers['internal'] | difference([keepalived_internal_unicast_src_ip]) }}"
  az2-external:
    interface: "{{ haproxy_keepalived_external_interface | default(management_bridge) }}"
    state: "{{ (inventory_hostname in groups['az2_all']) | ternary('MASTER', 'BACKUP') }}"
    virtual_router_id: 42
    priority: "{{ (inventory_hostname in groups['az2_all']) | ternary(200, (groups['haproxy']|length-groups['haproxy'].index(inventory_hostname))*50) }}"
    vips:
      - "{{ haproxy_keepalived_external_vip_cidr_az2 | default('169.254.1.1/24') }} dev {{ haproxy_keepalived_external_interface | default(management_bridge) }}"
    track_scripts: "{{ keepalived_scripts | dict2items | json_query('[*].{name: key, instance: value.instance}') | rejectattr('instance', 'equalto', 'internal') | map(attribute='name') | list }}"
    unicast_src_ip: "{{ keepalived_external_unicast_src_ip }}"
    unicast_peers: "{{ keepalived_unicast_peers['external'] | difference([keepalived_external_unicast_src_ip]) }}"
  az2-internal:
    interface: "{{ haproxy_keepalived_internal_interface | default(management_bridge) }}"
    state: "{{ (inventory_hostname in groups['az2_all']) | ternary('MASTER', 'BACKUP') }}"
    virtual_router_id: 43
    priority: "{{ (inventory_hostname in groups['az2_all']) | ternary(200, (groups['haproxy']|length-groups['haproxy'].index(inventory_hostname))*50) }}"
    vips:
      - "{{ haproxy_keepalived_internal_vip_cidr_az2 | default('169.254.2.1/24') }} dev {{ haproxy_keepalived_internal_interface | default(management_bridge) }}"
    track_scripts: "{{ keepalived_scripts | dict2items | json_query('[*].{name: key, instance: value.instance}') | rejectattr('instance', 'equalto', 'external') | map(attribute='name') | list }}"
    unicast_src_ip: "{{ keepalived_internal_unicast_src_ip }}"
    unicast_peers: "{{ keepalived_unicast_peers['internal'] | difference([keepalived_internal_unicast_src_ip]) }}"
  az3-external:
    interface: "{{ haproxy_keepalived_external_interface | default(management_bridge) }}"
    state: "{{ (inventory_hostname in groups['az3_all']) | ternary('MASTER', 'BACKUP') }}"
    virtual_router_id: 44
    priority: "{{ (inventory_hostname in groups['az3_all']) | ternary(200, (groups['haproxy']|length-groups['haproxy'].index(inventory_hostname))*50) }}"
    vips:
      - "{{ haproxy_keepalived_external_vip_cidr_az3 | default('169.254.1.1/24') }} dev {{ haproxy_keepalived_external_interface | default(management_bridge) }}"
    track_scripts: "{{ keepalived_scripts | dict2items | json_query('[*].{name: key, instance: value.instance}') | rejectattr('instance', 'equalto', 'internal') | map(attribute='name') | list }}"
    unicast_src_ip: "{{ keepalived_external_unicast_src_ip }}"
    unicast_peers: "{{ keepalived_unicast_peers['external'] | difference([keepalived_external_unicast_src_ip]) }}"
  az3-internal:
    interface: "{{ haproxy_keepalived_internal_interface | default(management_bridge) }}"
    state: "{{ (inventory_hostname in groups['az3_all']) | ternary('MASTER', 'BACKUP') }}"
    virtual_router_id: 45
    priority: "{{ (inventory_hostname in groups['az3_all']) | ternary(200, (groups['haproxy']|length-groups['haproxy'].index(inventory_hostname))*50) }}"
    vips:
      - "{{ haproxy_keepalived_internal_vip_cidr_az3 | default('169.254.2.1/24') }} dev {{ haproxy_keepalived_internal_interface | default(management_bridge) }}"
    track_scripts: "{{ keepalived_scripts | dict2items | json_query('[*].{name: key, instance: value.instance}') | rejectattr('instance', 'equalto', 'external') | map(attribute='name') | list }}"
    unicast_src_ip: "{{ keepalived_internal_unicast_src_ip }}"
    unicast_peers: "{{ keepalived_unicast_peers['internal'] | difference([keepalived_internal_unicast_src_ip]) }}"
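Every HAProxy host runs all six Keepalived instances. The `state` and `priority` templates decide which host owns each AZ's VIPs and in which order the others take over. The Python sketch below reproduces that arithmetic for one instance. It also shows the `unicast_peers` idea: every peer address except the host's own source IP. The host names and peer addresses are hypothetical, and the sketch is not Keepalived or OpenStack-Ansible code.

```python
# Hypothetical sketch of the state/priority/unicast_peers templates above.


def keepalived_role(host, instance_az, haproxy_hosts, az_members):
    """Return (state, priority) of host for the <instance_az>-* Keepalived instances."""
    in_az = host in az_members[instance_az]
    state = "MASTER" if in_az else "BACKUP"
    # Same arithmetic as the template: (len(haproxy) - index(host)) * 50 for remote hosts.
    priority = 200 if in_az else (len(haproxy_hosts) - haproxy_hosts.index(host)) * 50
    return state, priority


def unicast_peers(all_peers, own_src_ip):
    """All peer addresses except this host's own source IP (order kept here)."""
    return [peer for peer in all_peers if peer != own_src_ip]


HAPROXY = ["haproxy1", "haproxy2", "haproxy3"]  # hypothetical, one per AZ
AZ_MEMBERS = {"az1": {"haproxy1"}, "az2": {"haproxy2"}, "az3": {"haproxy3"}}

if __name__ == "__main__":
    for host in HAPROXY:
        print(host, keepalived_role(host, "az2", HAPROXY, AZ_MEMBERS))
```

With three HAProxy hosts, remote priorities are at most 150, so the local host (200) always wins its own AZ's VIPs. With four or more HAProxy hosts, the first remote host would reach or exceed 200, so this arithmetic assumes a small HAProxy group like the one in this example.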

To support multiple compute tiers (one with CPU overcommit and one with pinned CPUs), create the file /etc/openstack_deploy/group_vars/pinned_compute_hosts with the following content:

nova_cpu_allocation_ratio: 1.0

nova_ram_allocation_ratio: 1.0
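To see what a ratio of 1.0 means in practice, here is a simplified Python sketch of how an allocation ratio turns a host's inventory into schedulable capacity, using the Placement-style formula (total - reserved) * allocation_ratio. The host sizes and the 4.0 ratio for a shared host are hypothetical values chosen for illustration, not defaults from Nova or OpenStack-Ansible.

```python
# Simplified sketch: schedulable capacity = (total - reserved) * allocation_ratio.
# Host sizes and the 4.0 overcommit ratio are hypothetical.


def schedulable_units(total, reserved, allocation_ratio):
    """Amount of a resource the scheduler may hand out on one host."""
    return int((total - reserved) * allocation_ratio)


pinned_vcpus = schedulable_units(total=32, reserved=0, allocation_ratio=1.0)
shared_vcpus = schedulable_units(total=32, reserved=0, allocation_ratio=4.0)

if __name__ == "__main__":
    print("pinned host vCPUs:", pinned_vcpus)
    print("shared host vCPUs:", shared_vcpus)
```

With a ratio of 1.0, the pinned hosts never promise more CPU or RAM than they physically have. The shared hosts keep whatever overcommit ratio is configured elsewhere.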

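The rest of the variables can be defined in /etc/openstack_deploy/user_variables.yml. Many of them reference the az_name variable, so its presence (along with the corresponding groups) is essential for this scenario.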

---
# Set a different scheduling AZ name on each controller
# You can change that to a specific AZ name which will be used as default one
default_availability_zone: "{{ az_name }}"

# Defining unique internal VIP in hosts per AZ
_openstack_internal_az_vip: "{{ hostvars[groups['haproxy'][0]]['haproxy_keepalived_internal_vip_cidr_' ~ az_name] | ansible.utils.ipaddr('address') }}"
openstack_host_custom_hosts_records: "{{ _openstack_services_fqdns['internal'] | map('regex_replace', '^(.*)$', _openstack_internal_az_vip ~ ' \\1') }}"

# Use local to AZ memcached inside of AZ
memcached_servers: >-
  {{
    groups['memcached'] | intersect(groups[az_name ~ '_containers'])
    | map('extract', hostvars, 'management_address')
    | map('regex_replace', '(.+)', '\1:' ~ memcached_port)
    | list | join(',')
  }}

# Ceph-Ansible variables
ceph_cluster_name: "ceph-{{ az_name }}"
ceph_keyrings_dir: "/etc/openstack_deploy/ceph/{{ ceph_cluster_name }}"
ceph_conf_file: "{{ lookup('file', ceph_keyrings_dir ~ '/ceph.conf') }}"
cluster: "{{ ceph_cluster_name }}"
cluster_network: "{{ public_network }}"
monitor_address: "{{ container_networks['storage_address']['address'] }}"
mon_group_name: "ceph_mon_{{ az_name }}"
mgr_group_name: "{{ mon_group_name }}"
osd_group_name: "ceph_osd_{{ az_name }}"
public_network: "{{ cidr_networks['storage_' ~ az_name] }}"
rgw_group_name: "ceph_rgw_{{ az_name }}"
rgw_zone: "{{ az_name }}"

# Cinder variables
cinder_active_active_cluster_name: "{{ ceph_cluster_name }}"
cinder_default_availability_zone: "{{ default_availability_zone }}"
cinder_storage_availability_zone: "{{ az_name }}"

# Glance to use Swift as a backend
glance_default_store: swift
glance_use_uwsgi: False

# Neutron variables
neutron_availability_zone: "{{ az_name }}"
neutron_default_availability_zones:
  - az1
  - az2
  - az3
neutron_ovn_distributed_fip: True
neutron_plugin_type: ml2.ovn
neutron_plugin_base:
  - ovn-router
  - qos
  - auto_allocate
neutron_ml2_drivers_type: geneve,vlan
neutron_provider_networks:
  network_types: "{{ neutron_ml2_drivers_type }}"
  network_geneve_ranges: "1:65000"
  network_vlan_ranges: >-
    vlan:100:200
  network_mappings: "vlan:br-vlan"
  network_interface_mappings: "br-vlan:bond0"

# Nova variables
nova_cinder_rbd_inuse: True
nova_glance_rbd_inuse: false
nova_libvirt_images_rbd_pool: ""
nova_libvirt_disk_cachemodes: network=writeback,file=directsync
nova_libvirt_hw_disk_discard: unmap
nova_nova_conf_overrides:
  DEFAULT:
    default_availability_zone: "{{ default_availability_zone }}"
    default_schedule_zone: "{{ default_availability_zone }}"
  cinder:
    cross_az_attach: false

# Create required aggregates and flavors
cpu_pinned_flavors:
  specs:
    - name: pinned.small
      vcpus: 2
      ram: 2048
    - name: pinned.medium
      vcpus: 4
      ram: 8192
  extra_specs:
    hw:cpu_policy: dedicated
    hw:vif_multiqueue_enabled: 'true'
    trait:CUSTOM_PINNED_CPU: required

cpu_shared_flavors:
  specs:
    - name: shared.small
      vcpus: 1
      ram: 1024
    - name: shared.medium
      vcpus: 2
      ram: 4096

openstack_user_compute:
  flavors:
    - "{{ cpu_shared_flavors }}"
    - "{{ cpu_pinned_flavors }}"
  aggregates:
    - name: az1-shared
      hosts: "{{ groups['az1_shared_compute_hosts'] }}"
      availability_zone: az1
    - name: az1-pinned
      hosts: "{{ groups['az1_pinned_compute_hosts'] }}"
      availability_zone: az1
      metadata:
        trait:CUSTOM_PINNED_CPU: required
        pinned-cpu: 'true'
    - name: az2-shared
      hosts: "{{ groups['az2_shared_compute_hosts'] }}"
      availability_zone: az2
    - name: az3-shared
      hosts: "{{ groups['az3_shared_compute_hosts'] }}"
      availability_zone: az3
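The memcached_servers expression in these user variables keeps each AZ's services on the memcached instances inside that AZ. The Python sketch below mirrors the filter chain: keep only memcached hosts in the AZ's container group, look up their management addresses, append the port, and join with commas. The container names and addresses are hypothetical, 11211 is assumed as the value of memcached_port, and the sketch keeps the original group order.

```python
# Hypothetical sketch of the memcached_servers expression: AZ-local memcached
# hosts rendered as "address:port" and joined with commas.


def memcached_servers(memcached_hosts, az_containers, management_address, port=11211):
    """Comma-separated 'address:port' list of memcached hosts inside the AZ."""
    local = [host for host in memcached_hosts if host in az_containers]
    return ",".join(f"{management_address[host]}:{port}" for host in local)


MEMCACHED = ["infra1_memcached", "infra2_memcached", "infra3_memcached"]
ADDRESSES = {
    "infra1_memcached": "172.29.236.101",
    "infra2_memcached": "172.29.236.102",
    "infra3_memcached": "172.29.236.103",
}
AZ2_CONTAINERS = {"infra2_memcached", "infra2_keystone"}

if __name__ == "__main__":
    print(memcached_servers(MEMCACHED, AZ2_CONTAINERS, ADDRESSES))
```

With a single controller per AZ, each AZ ends up with exactly one memcached server in its list.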